Brief introduction to TensorFlow

- Open source

- A second-generation machine learning system

- Developed by Google

- Improves flexibility, portability, speed and scalability

- A framework for implementing and executing machine learning algorithms

- Expresses computation as tensors flowing through a graph

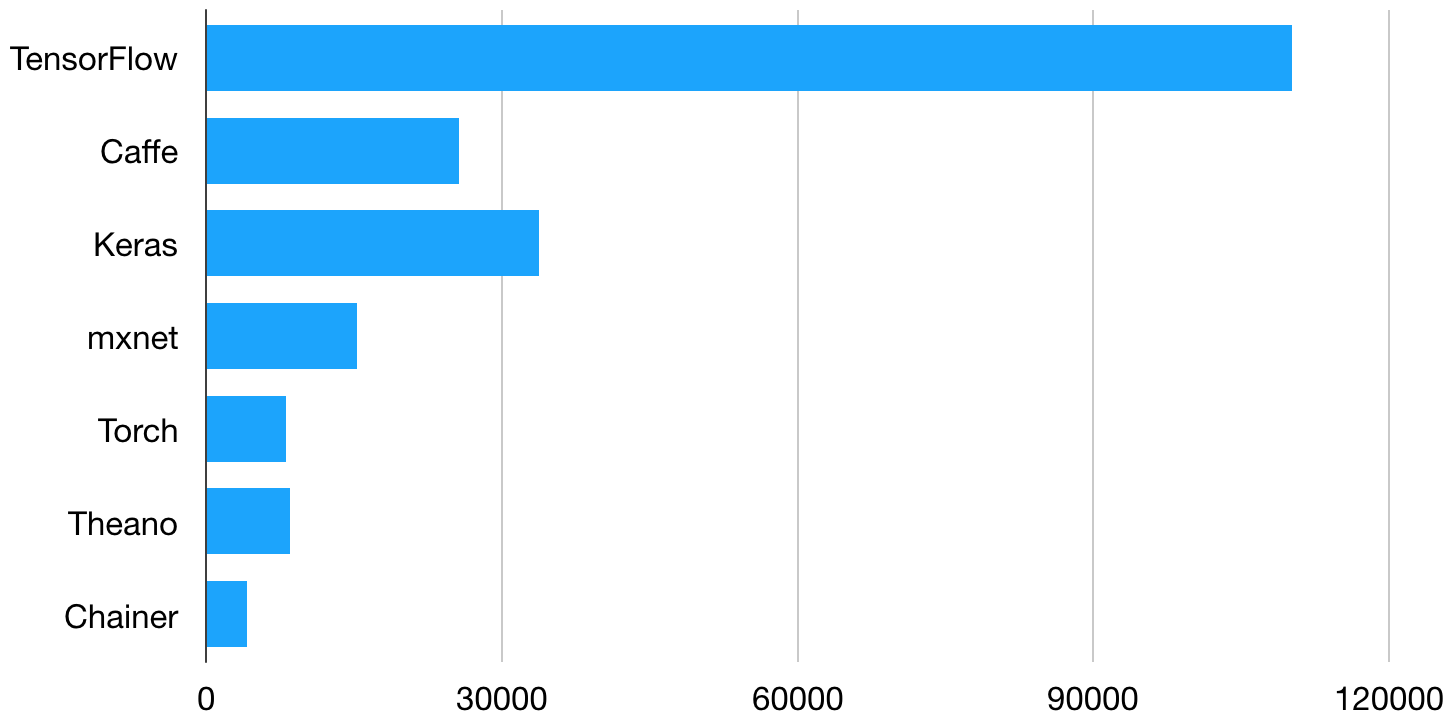

GitHub stars of open-source deep learning platforms

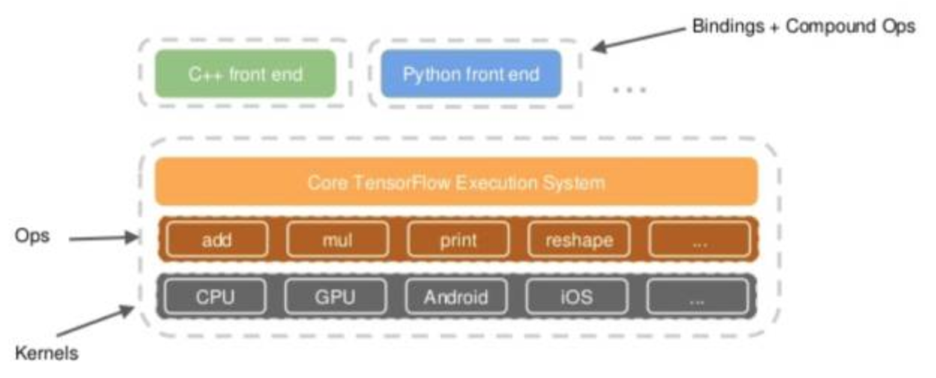

The architecture of TensorFlow

- Front-end: provides the programming model and is responsible for constructing computational graphs; supports Python, C++ and other languages.

- Back-end: provides the runtime environment and is responsible for executing the computational graph; implemented in C++.

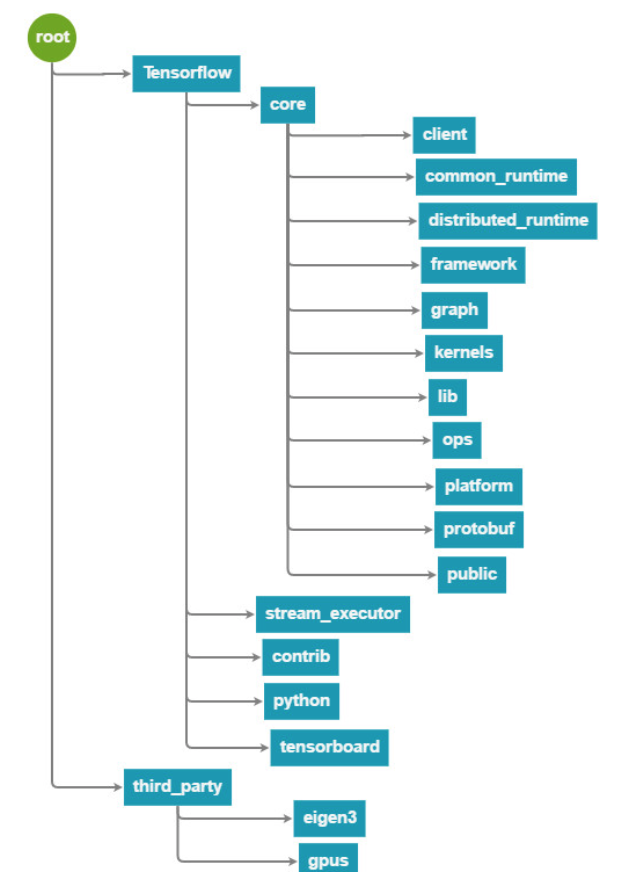
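The front-end/back-end split can be illustrated with a toy sketch in plain Python. It is not TensorFlow's API: `build_graph` and `run_graph` are hypothetical names, and JSON stands in for the serialized graph format (TensorFlow itself uses a `GraphDef` protocol buffer and a C++ runtime). The front-end only records operations as data; the back-end parses that description and computes the requested node.

```python
import json


def build_graph():
    """Hypothetical front-end: records operations as data, computes nothing."""
    nodes = [
        {"name": "a", "op": "Const", "value": 3.0, "inputs": []},
        {"name": "b", "op": "Const", "value": 4.0, "inputs": []},
        {"name": "sum", "op": "Add", "inputs": ["a", "b"]},
        {"name": "out", "op": "Mul", "inputs": ["sum", "b"]},
    ]
    # Serialize so the description could cross a language boundary.
    return json.dumps({"nodes": nodes})


def run_graph(serialized, fetch):
    """Hypothetical back-end: parses the description and evaluates one node."""
    nodes = {n["name"]: n for n in json.loads(serialized)["nodes"]}
    cache = {}

    def evaluate(name):
        if name in cache:
            return cache[name]
        node = nodes[name]
        args = [evaluate(i) for i in node["inputs"]]
        if node["op"] == "Const":
            result = node["value"]
        elif node["op"] == "Add":
            result = args[0] + args[1]
        elif node["op"] == "Mul":
            result = args[0] * args[1]
        else:
            raise ValueError(f"unknown op: {node['op']}")
        cache[name] = result
        return result

    return evaluate(fetch)


graph = build_graph()
print(run_graph(graph, "out"))  # 28.0
```

Because the two halves share only the serialized description, the back-end could be rewritten in another language without touching the front-end, which is the motivation for the split described above.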

Code directory structure

- graph: operations on the computational (dataflow) graph, such as construction, partitioning, optimization and execution.

- kernels: OpKernel implementations of operations, such as matmul, conv2d, argmax and batch_norm.

- ops: basic operations, gradient operations, I/O-related ops, and control-flow and data-flow operations.

- eigen3: the Eigen matrix library, which TensorFlow calls to implement its basic operations.
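The ops/kernels split separates an operation's interface from its device-specific implementations. A minimal sketch of that lookup, assuming a hypothetical Python registry (TensorFlow does this in C++ with macros such as `REGISTER_OP` and `REGISTER_KERNEL_BUILDER`; the names below are illustrative only):

```python
# Hypothetical registries: op name -> number of inputs, (op name, device) -> kernel.
OP_REGISTRY = {}
KERNEL_REGISTRY = {}


def register_op(name, num_inputs):
    """Declare an op's interface (here just its input count)."""
    OP_REGISTRY[name] = num_inputs


def register_kernel(name, device):
    """Decorator that attaches a device-specific implementation to an op."""
    def decorator(fn):
        KERNEL_REGISTRY[(name, device)] = fn
        return fn
    return decorator


register_op("MatMul", num_inputs=2)


@register_kernel("MatMul", "CPU")
def matmul_cpu(a, b):
    # Naive matrix product on nested lists: each row of a dotted with each column of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def dispatch(op_name, device, *inputs):
    """Check the op's interface, then run the kernel registered for the device."""
    if op_name not in OP_REGISTRY:
        raise KeyError(f"unregistered op: {op_name}")
    if len(inputs) != OP_REGISTRY[op_name]:
        raise TypeError(f"{op_name} expects {OP_REGISTRY[op_name]} inputs")
    kernel = KERNEL_REGISTRY.get((op_name, device))
    if kernel is None:
        raise LookupError(f"no {device} kernel registered for {op_name}")
    return kernel(*inputs)


print(dispatch("MatMul", "CPU", [[1, 2], [3, 4]], [[5, 6], [7, 8]]))
# [[19, 22], [43, 50]]
```

Asking for a `"GPU"` kernel here raises `LookupError`, since only a CPU kernel was registered; supporting another device means registering another kernel, not changing the op.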

TensorFlow programming model

- TensorFlow uses symbolic programming.

- Symbolic programming abstracts the computation into a graph in which all input nodes, operation nodes and output nodes are symbolic.

- Symbolic programming tends to be more efficient in memory and computation, because the whole graph is known before it runs and can be optimized as a unit.

- Symbolic programs contain a compilation step, explicit or implicit, that wraps the previously defined computational graph into a callable function; the actual computation happens only after compilation.
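The define, compile, execute sequence can be sketched in a few lines of plain Python. `Symbol`, `var` and `compile_graph` are hypothetical names for this illustration, not TensorFlow classes; the point is that building `c` records a graph without doing any arithmetic, and only calling the compiled function computes a value.

```python
class Symbol:
    """A node in a toy symbolic graph (hypothetical, not tf.Tensor)."""

    def __init__(self, op, inputs=(), name=None):
        self.op, self.inputs, self.name = op, inputs, name

    def __add__(self, other):
        return Symbol("add", (self, other))

    def __mul__(self, other):
        return Symbol("mul", (self, other))


def var(name):
    """Create a symbolic input node."""
    return Symbol("input", name=name)


def compile_graph(output):
    """Wrap the graph ending at `output` into an ordinary Python function."""
    def fn(**inputs):
        def evaluate(node):
            if node.op == "input":
                return inputs[node.name]
            left, right = (evaluate(n) for n in node.inputs)
            return left + right if node.op == "add" else left * right
        return evaluate(output)
    return fn


# 1. Define: only the graph is recorded, nothing is computed.
a, b = var("a"), var("b")
c = a * b + a
# 2. Compile: turn the graph into a callable.
f = compile_graph(c)
# 3. Execute: the arithmetic happens only now.
print(f(a=2, b=3))  # 8
```

By contrast, the imperative version `a, b = 2, 3; c = a * b + a` computes `c` immediately and never has a whole graph available to optimize.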

Basic concepts of TensorFlow

- Use a Graph to represent computation.

- Execute the graph in a Session.

- Use Tensors to represent data.

- Use Variables to maintain state.

- Use Feed and Fetch to assign data to, or extract data from, any operation.
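The five concepts fit together as in the following toy model, written in plain Python under the assumption that a graph is just a list of nodes. `Graph`, `Session`, `placeholder`, `variable` and `assign` are hypothetical stand-ins that loosely mirror TensorFlow 1.x (where `Session.run(fetches, feed_dict=...)` plays the same role); they are not its implementation. Variable values live in the session and persist across `run` calls, a feed overrides a node with outside data, and fetches select which node values are returned.

```python
class Node:
    """One vertex of a toy dataflow graph (hypothetical, not a TensorFlow class)."""

    def __init__(self, kind, inputs=(), fn=None, value=None):
        self.kind, self.inputs, self.fn, self.value = kind, inputs, fn, value


class Graph:
    """Records nodes; building the graph performs no computation."""

    def __init__(self):
        self.nodes = []

    def _add(self, *args, **kwargs):
        node = Node(*args, **kwargs)
        self.nodes.append(node)
        return node

    def placeholder(self):
        return self._add("placeholder")

    def constant(self, value):
        return self._add("constant", value=value)

    def variable(self, initial):
        return self._add("variable", value=initial)

    def op(self, fn, *inputs):
        return self._add("op", inputs=inputs, fn=fn)

    def assign(self, var, source):
        return self._add("assign", inputs=(var, source))


class Session:
    """Executes a Graph and holds the current values of its variables."""

    def __init__(self, graph):
        self.state = {n: n.value for n in graph.nodes if n.kind == "variable"}

    def run(self, fetches, feed_dict=None):
        feed_dict = feed_dict or {}
        cache = {}

        def evaluate(node):
            if node in feed_dict:  # Feed: outside data overrides the node
                return feed_dict[node]
            if node in cache:
                return cache[node]
            if node.kind == "placeholder":
                raise ValueError("placeholder must be fed")
            if node.kind == "constant":
                result = node.value
            elif node.kind == "variable":
                result = self.state[node]
            elif node.kind == "assign":  # update the session-held state
                var, source = node.inputs
                result = self.state[var] = evaluate(source)
            else:
                result = node.fn(*(evaluate(i) for i in node.inputs))
            cache[node] = result
            return result

        return [evaluate(f) for f in fetches]  # Fetch: return requested values


g = Graph()
x = g.placeholder()
counter = g.variable(0)
step = g.constant(1)
increment = g.assign(counter, g.op(lambda p, q: p + q, counter, step))
scaled = g.op(lambda p, q: p * q, x, counter)

sess = Session(g)
sess.run([increment])
sess.run([increment])
print(sess.run([counter, scaled], feed_dict={x: 10}))  # [2, 20]
```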

A Graph is a description of the computation; it must be run in a Session.

In addition to supplying data through Variables and Constants, TensorFlow provides a Feed mechanism for importing data from outside the graph.

A tensor is data of any dimensionality: zero-dimensional scalars as well as one-, two-, three- and four-dimensional arrays are all called tensors. The name TensorFlow reflects this model: the nodes of the graph stay fixed while tensors flow between them.
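The rank (number of dimensions) and shape of a tensor can be read off a rectangular nested Python list. A small sketch, with hypothetical helper names `shape_of` and `rank_of`:

```python
def shape_of(data):
    """Return the shape of a rectangular nested list; a scalar has shape ()."""
    if not isinstance(data, list):
        return ()
    if not data:
        return (0,)
    inner = shape_of(data[0])
    # Every element must have the same shape, otherwise it is not a tensor.
    if any(shape_of(item) != inner for item in data[1:]):
        raise ValueError("ragged nested list is not a valid tensor")
    return (len(data),) + inner


def rank_of(data):
    """Rank is the number of dimensions, i.e. the length of the shape."""
    return len(shape_of(data))


scalar = 5.0                       # rank 0
vector = [1.0, 2.0, 3.0]           # rank 1
matrix = [[1.0, 2.0], [3.0, 4.0]]  # rank 2
# rank 4, [batch, height, width, channels]: 2 images, 2x3 pixels, 3 channels
images = [[[[0.0] * 3 for _ in range(3)] for _ in range(2)] for _ in range(2)]

for t in (scalar, vector, matrix, images):
    print(rank_of(t), shape_of(t))
# 0 ()
# 1 (3,)
# 2 (2, 2)
# 4 (2, 2, 3, 3)
```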

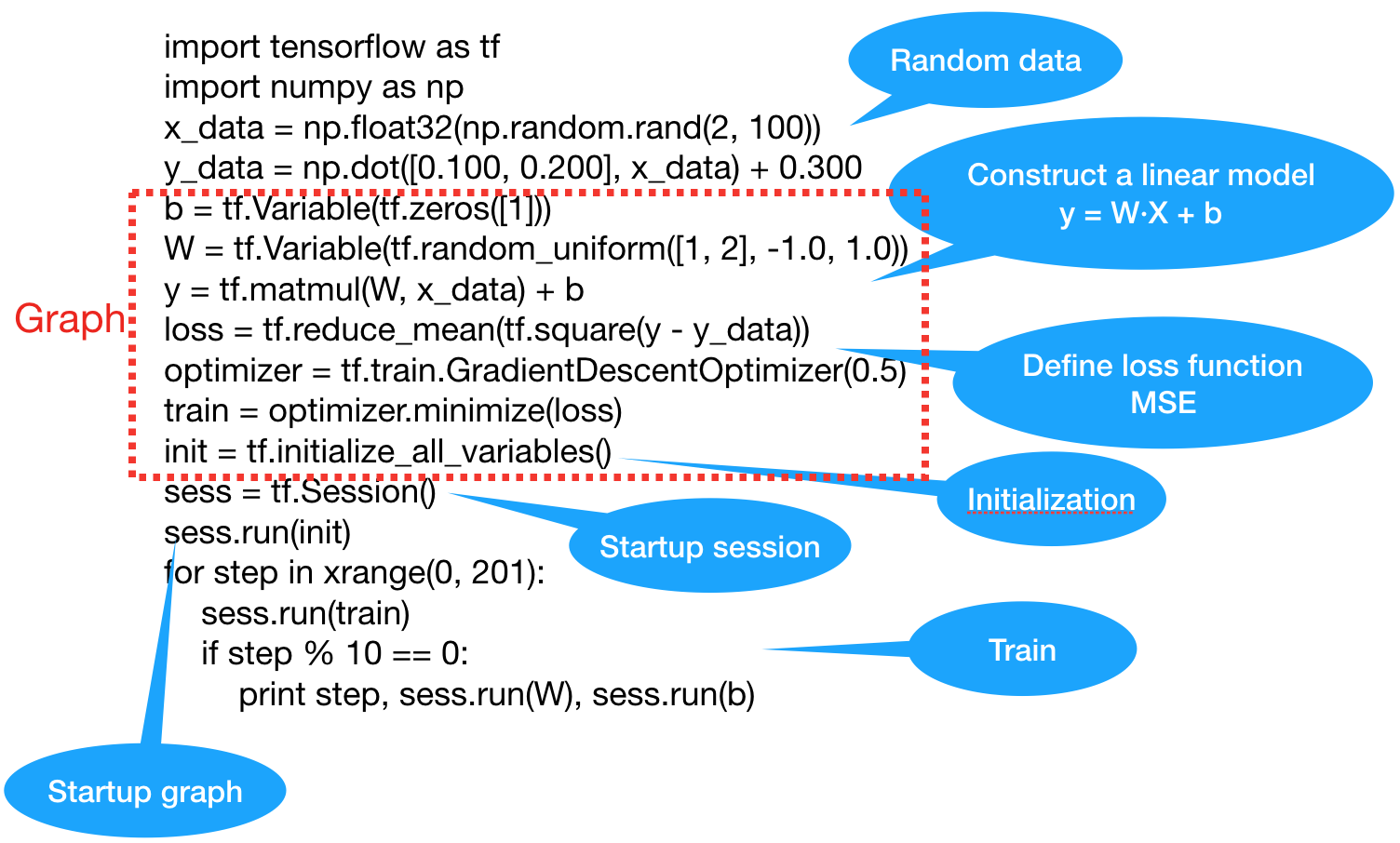

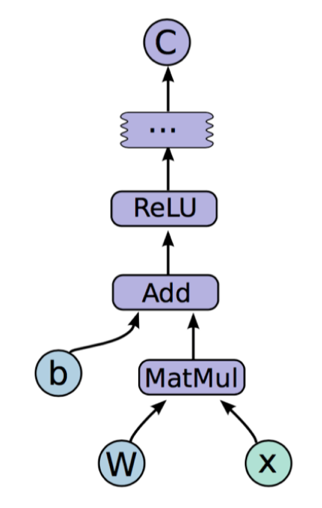

An Example:

TensorFlow implementation process

The construction of a graph

In this phase, a graph is created to represent and train the neural network.

The execution of the graph

In this phase, the training operations in the graph are executed repeatedly.
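A minimal sketch of the two phases in plain Python, assuming a linear model `y = w * x + b` fitted by gradient descent on made-up data generated from `y = 2x + 1`. Here the "graph" is just the `train_step` function defined during construction, and `params` stands in for TensorFlow Variables; a real TensorFlow program would build these as graph nodes instead.

```python
# --- Construction phase: describe the model and its update rule (no training yet).
# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
params = {"w": 0.0, "b": 0.0}  # stands in for trainable Variables
learning_rate = 0.05


def train_step():
    """One gradient-descent update on mean squared error; returns the pre-update loss."""
    n = len(xs)
    errors = [params["w"] * x + params["b"] - y for x, y in zip(xs, ys)]
    grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
    grad_b = 2.0 / n * sum(errors)
    params["w"] -= learning_rate * grad_w
    params["b"] -= learning_rate * grad_b
    return sum(e * e for e in errors) / n


# --- Execution phase: run the training operation repeatedly.
for _ in range(2000):
    train_step()

print(round(params["w"], 3), round(params["b"], 3))  # 2.0 1.0
```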

Brief summary of TensorFlow

TensorFlow is a programming system that represents computation as a graph. The nodes in the graph are called ops (short for operations). An op takes zero or more Tensors, performs some computation, and produces zero or more Tensors. A Tensor is a typed multidimensional array. For example, a batch of images can be represented as a four-dimensional array of floating-point values with shape [batch, height, width, channels].
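The zero-or-more inputs and outputs of an op can be illustrated with a toy `Op` wrapper in plain Python (a hypothetical class, not TensorFlow's `Operation`). In TensorFlow, for comparison, a constant op takes no inputs, `tf.split` returns a list of tensors, and `tf.no_op` produces no outputs at all.

```python
class Op:
    """A toy op: a named function from a list of tensors to a list of tensors."""

    def __init__(self, name, fn):
        self.name, self.fn = name, fn

    def __call__(self, *inputs):
        return self.fn(list(inputs))


const = Op("Const", lambda ins: [[1.0, 2.0, 3.0, 4.0]])               # 0 inputs -> 1 output
add = Op("Add", lambda ins: [[p + q for p, q in zip(ins[0], ins[1])]])  # 2 inputs -> 1 output
split = Op("Split", lambda ins: [ins[0][: len(ins[0]) // 2],
                                 ins[0][len(ins[0]) // 2:]])           # 1 input -> 2 outputs
no_op = Op("NoOp", lambda ins: [])                                     # 0 inputs -> 0 outputs

(t,) = const()
left, right = split(t)
(total,) = add(left, right)
print(total, no_op())  # [4.0, 6.0] []
```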

TensorFlow uses the tensor data structure (in effect, a multidimensional array) to represent all data and to pass it between the nodes of the computational graph. A tensor has a fixed type, rank and shape; you can refer to Rank, Shape, and Type for a deeper understanding of these concepts.

The original article is at https://blog.nooa.tech/articles/717ad116/ and may be freely reposted.